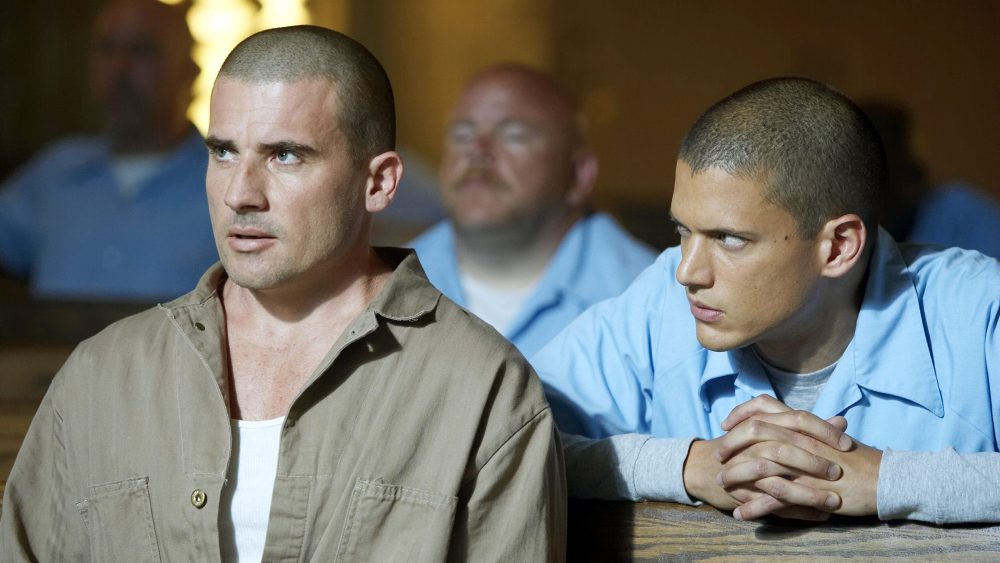

OpenAI appears to have calmed fears around Sora 2, winning over SAG-AFTRA, CAA, UTA and actor Bryan Cranston with new guardrails on the platform to protect actors’ voices and likenesses.

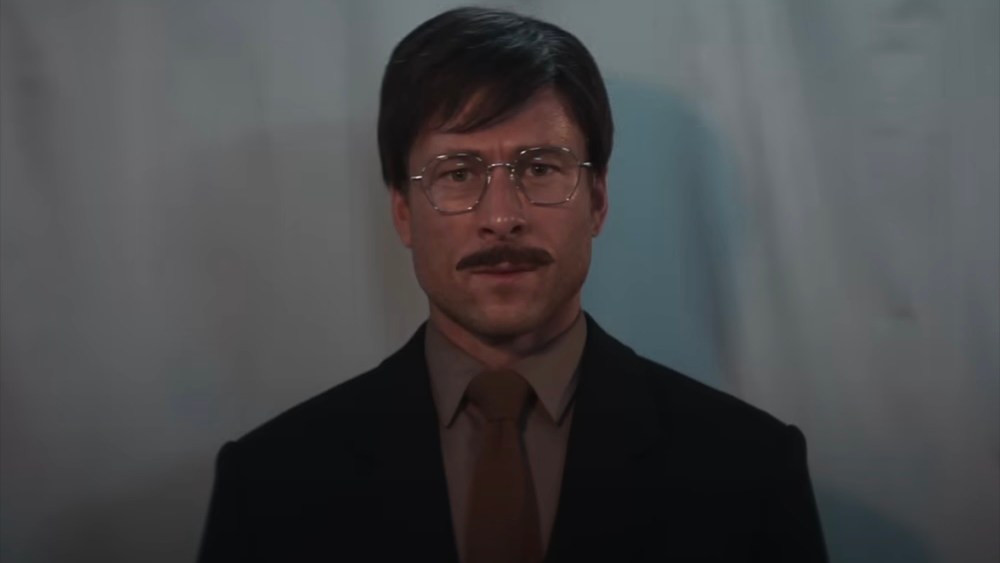

Cranston was among those who raised concerns when the initial launch of the AI video platform allowed users to create his image without his permission. In a statement issued by the actors’ union on Monday, Cranston thanked OpenAI for updating Sora 2 to add new protections.

“I was deeply concerned not just for myself, but for all performers whose work and identity can be misused in this way,” Cranston said. “I am grateful to OpenAI for its policy and for improving its guardrails, and hope that they and all of the companies involved in this work, respect our personal and professional right to manage replication of our voice and likeness.”

CAA and UTA were out front in raising alarms about Sora 2 earlier this month, when the initial rollout allowed users to create AI video clips using copyrighted characters. Since then, OpenAI has been in damage control mode and has worked with industry stakeholders to try to fix the situation.

SAG-AFTRA and the agencies noted that the company has engaged in “productive collaboration” to protect actors’ rights. In a statement, Sean Astin, the new president of the union, said that actors face a risk of “massive misappropriation” from AI.

“Bryan did the right thing by communicating with his union and his professional representatives to have the matter addressed,” Astin said. “This particular case has a positive resolution. I’m glad that OpenAI has committed to using an opt-in protocol, where all artists have the ability to choose whether they wish to participate in the exploitation of their voice and likeness using A.I.”

OpenAI previously endorsed the NO FAKES Act, a federal bill that would ban non-consensual digital replicas. SAG-AFTRA has made passage of that bill one of its highest legislative priorities. Under current law, actors have voice and likeness protections in California and other states, but not at the federal level.

“OpenAI is deeply committed to protecting performers from the misappropriation of their voice and likeness,” Sam Altman, OpenAI’s CEO, said in the joint statement on Monday. “We were an early supporter of the NO FAKES Act when it was introduced last year, and will always stand behind the rights of performers.”

Sora 2 allows performers to “opt in” to having their likenesses used on the platform. But the initial release allowed users to manipulate the images of actors who had not opted in — raising fears that the system was functionally an “opt out.” Altman later said that OpenAI was tweaking the platform to give copyright holders and performers greater control over how their images are used.
