Kyle Grillot | Bloomberg | Getty Images

The artificial intelligence startup has expanded its safety controls in recent months as it faced mounting scrutiny over how it protects users, particularly minors.

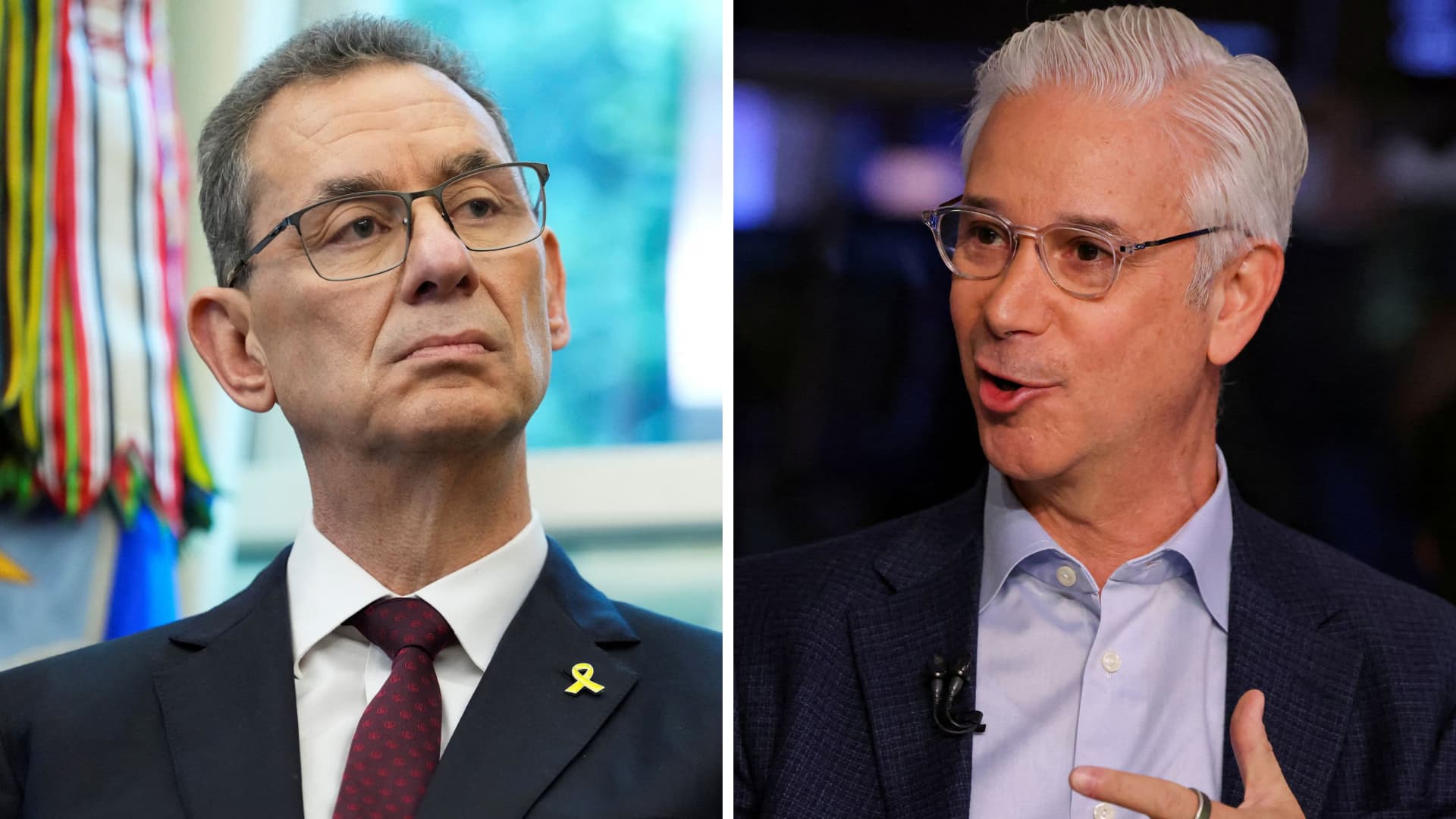

But Altman said Tuesday in a post on X that OpenAI will be able to “safely relax” most restrictions now that it has new tools and has been able to mitigate “serious mental health issues.”

Altman said that starting in December, OpenAI will allow more content, including erotica, on ChatGPT for “verified adults.”

Altman tried to clarify the move in a post on X on Wednesday, saying OpenAI cares “very much about the principle of treating adult users like adults,” but it will still not allow “things that cause harm to others.”

“In the same way that society differentiates other appropriate boundaries (R-rated movies, for example) we want to do a similar thing here,” Altman wrote.

The posts are at odds with comments Altman made during a podcast appearance in August, where he said he was “proud” of OpenAI’s ability to resist certain features, like a “sex bot avatar,” that could boost engagement on ChatGPT.

“There’s a lot of short-term stuff we could do that would really juice growth or revenue and be very misaligned with that long-term goal,” Altman said.

In September, the Federal Trade Commission launched an inquiry into OpenAI and other tech companies over how chatbots like ChatGPT could negatively affect children and teenagers. OpenAI is also named in a wrongful death lawsuit filed by a family who blamed ChatGPT for their teenage son’s death by suicide.

The company has taken several public steps to enhance safety on ChatGPT in the months following the inquiry and the lawsuit. It launched a series of parental controls late last month, and it is building an age prediction system that will automatically apply teen-appropriate settings for users under 18.

On Tuesday, OpenAI announced it had assembled a council of eight experts who will provide insight into how AI impacts users’ mental health, emotions and motivation. Altman posted about the company’s aim to loosen restrictions that same day, sparking confusion and swift backlash on social media.

Altman said the post “blew up” much more than he was expecting.

His post also caught the attention of advocacy groups like the National Center on Sexual Exploitation, which called on OpenAI to reverse its decision to allow erotica on ChatGPT.

“Sexualized AI chatbots are inherently risky, generating real mental health harms from synthetic intimacy; all in the context of poorly defined industry safety standards,” Haley McNamara, NCOSE’s executive director, said in a statement on Wednesday.

If you are having suicidal thoughts or are in distress, contact the Suicide & Crisis Lifeline at 988 for support and assistance from a trained counselor.

